GPU parallel computing for machine learning in Python: how to build a parallel computer (English Edition) eBook : Takefuji, Yoshiyasu: Amazon.es: Kindle Store

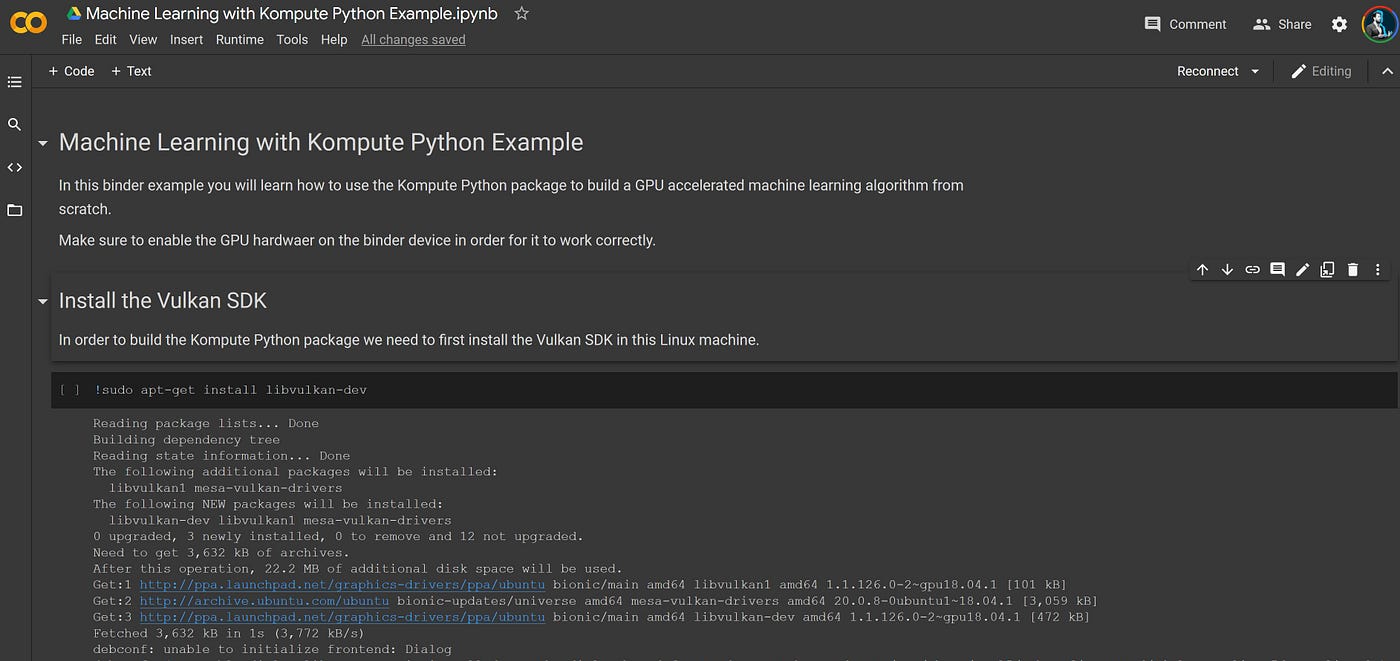

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

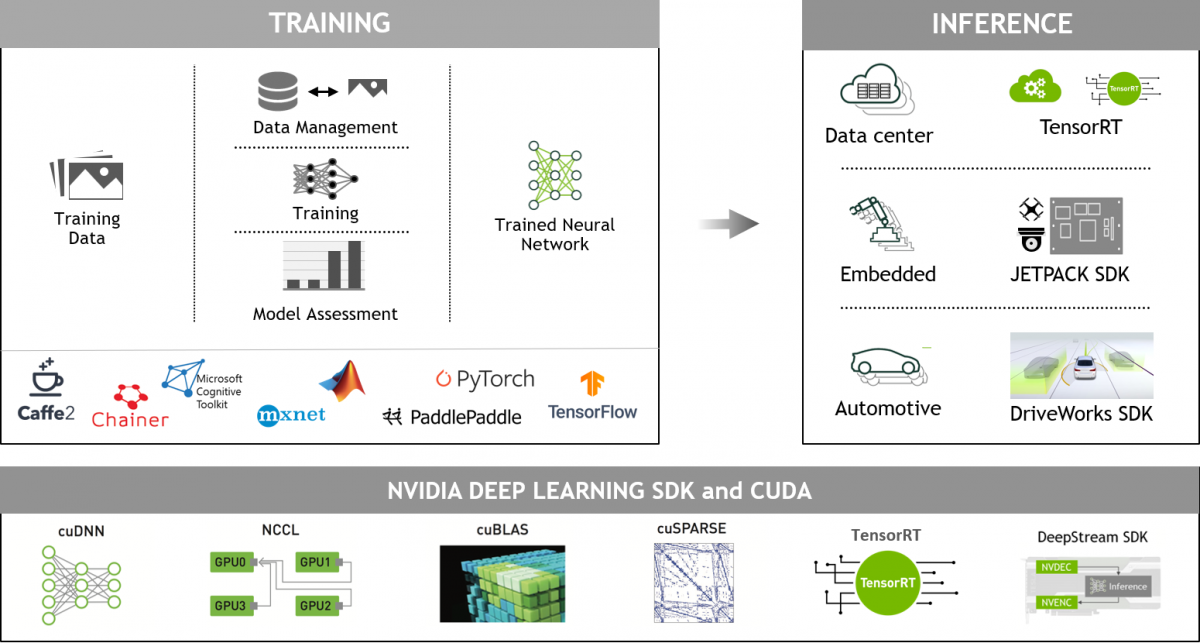

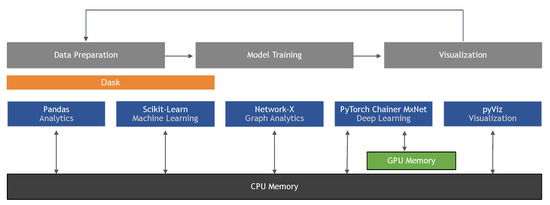

Machine Learning in Python: Main developments and technology trends in data science, machine learning, and artificial intelligence – arXiv Vanity

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

GPU parallel computing for machine learning in Python: how to build a parallel computer : Takefuji, Yoshiyasu: Amazon.es: Books

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence
